5 Inference for simple linear regression

Learning goals

- Explain how statistical inference is used to draw conclusions about a population model coefficient

- Construct confidence intervals using bootstrap simulation

- Conduct hypothesis tests using permutation

- Explain how the Central Limit Theorem is applied to inference for the model coefficient

- Conduct statistical inference using mathematical models based on the Central Limit Theorem

- Interpret results from statistical inference in the context of the data

- Explain the connection between hypothesis tests and confidence intervals

5.1 Introduction: Access to playgrounds

The Trust for Public Land is a non-profit organization that advocates for equitable access to outdoor spaces in cities across the United States. In the 2021 report Parks and an Equitable Recovery, the organization stated that “parks are not just a nicety—they are a necessity” (The Trust for Public Land 2021). The report details the many health, social, and environmental benefits of having ample access to public outdoor space in cities, along with the various factors that impede the access to parks and other outdoor space for some residents.

One type of outdoor space the authors study in the report is playgrounds. The report describes playgrounds as outdoor spaces that “bring children and adults together” (The Trust for Public Land 2021, 13) and a place that was important for distributing “fresh food and prepared meals to those in need, particularly school-aged children” (The Trust for Public Land 2021, 9) during the global COVID-19 pandemic.

Given the impact of playgrounds for both children and adults in a community, we want to understand factors associated with variability in the access to playgrounds. In particular, we want to (1) investigate whether local government spending is useful in understanding variability in playground access, and if so, (2) quantify the true relationship between local government spending and playground access.

The data include information on 97 of the most populated cities in the United States (US) in the year 2020. The data were originally collected by the Trust for Public Land and were featured as part of the TidyTuesday weekly data visualization challenge in June 2021 (Community 2024). The data are in parks.csv. The analysis in this chapter focuses on two variables:

- per_capita_expend: Total amount the city government spent per resident in 2020 in US dollars (USD). This is a measure of how much a city invests in services and facilities for its residents. We refer to it as a city’s “per capita expenditure”.

- playgrounds: Number of playgrounds per 10,000 residents in 2020

Which of the following do you think best describes the relationship between per_capita_expend and playgrounds?1

- The relationship is positive.

- The relationship is negative.

- There is no relationship.

5.1.1 Exploratory data analysis

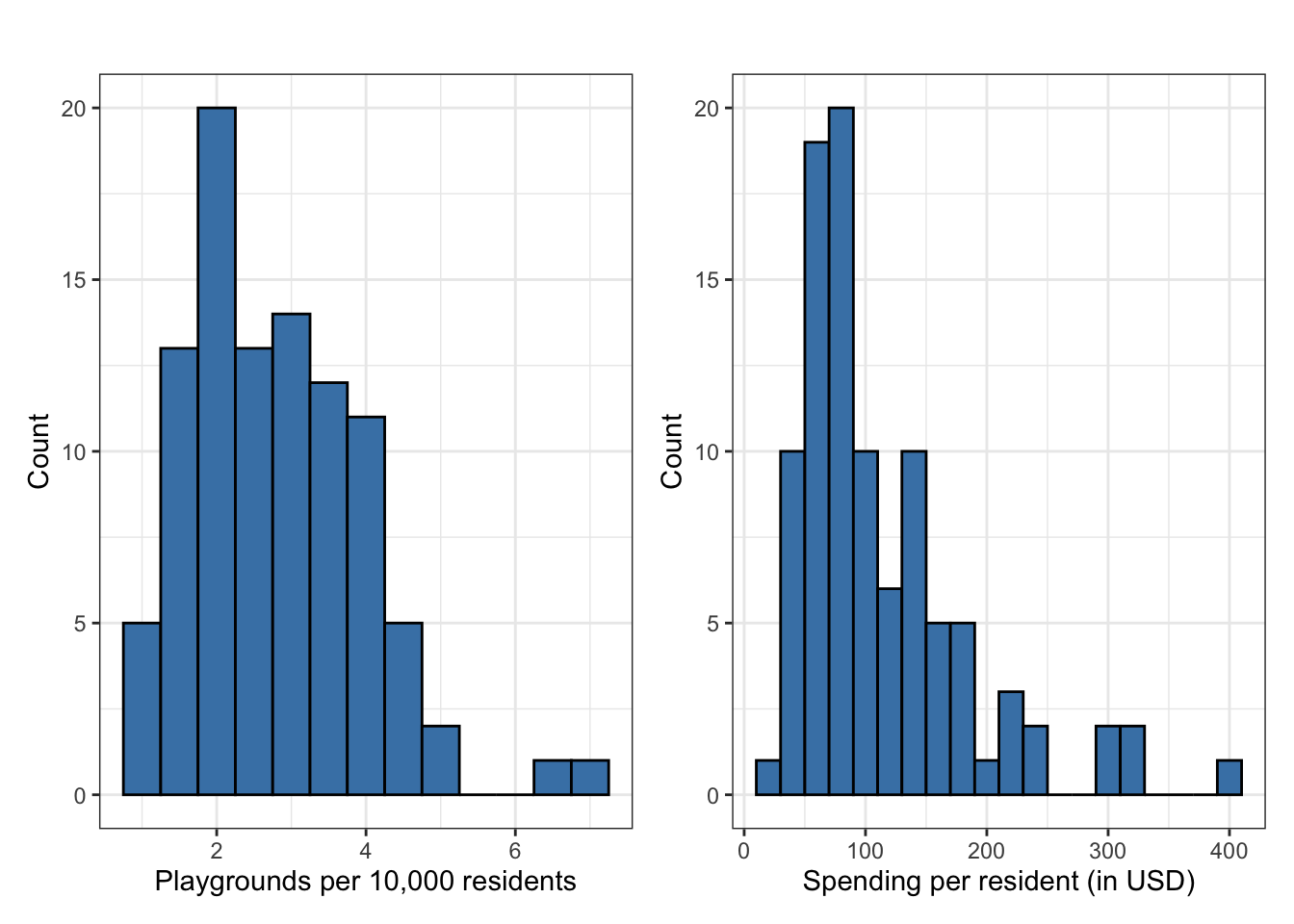

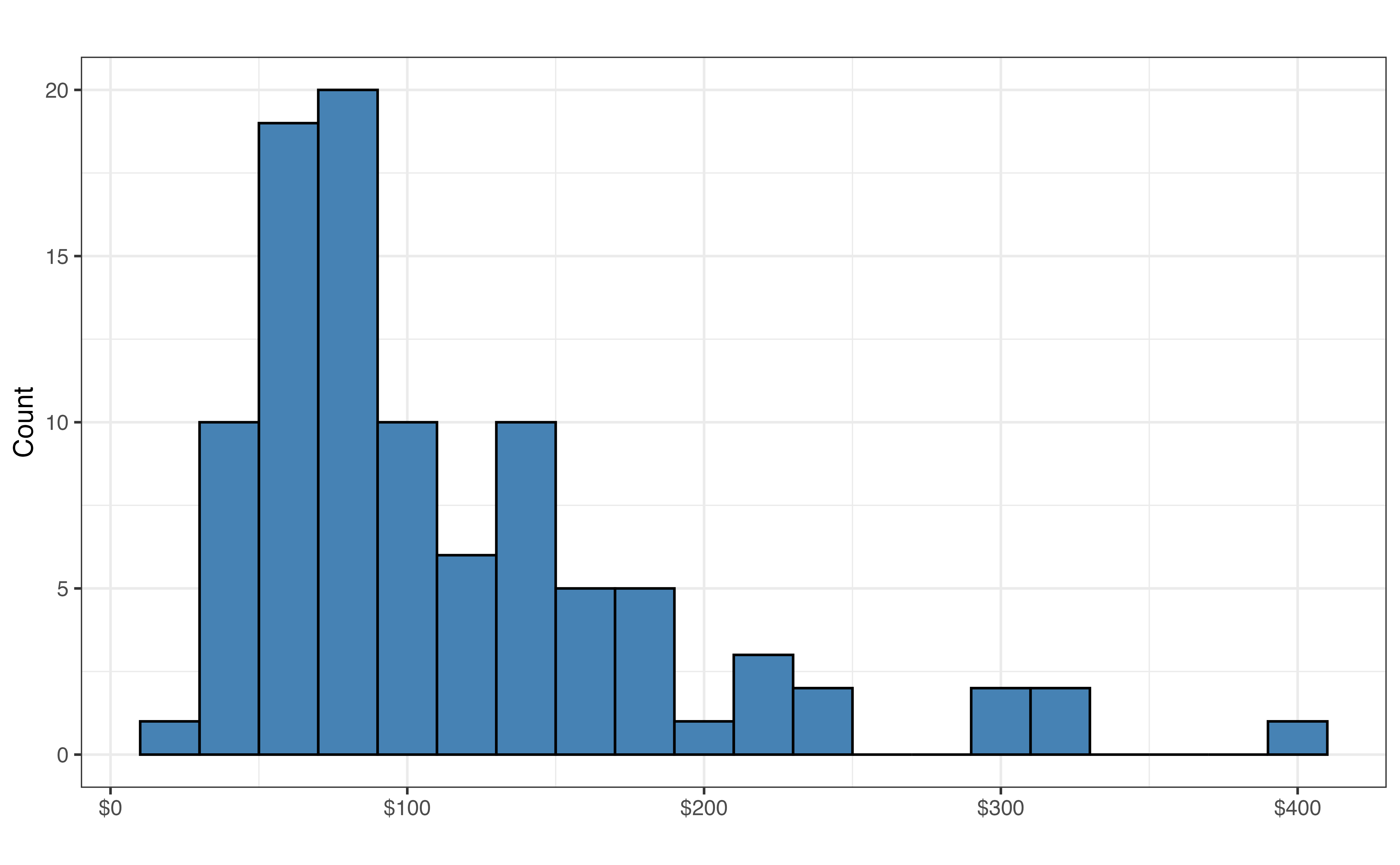

The visualizations and summary statistics for univariate and bivariate exploratory data analysis are in Figure 5.1 and Table 5.1.

playgrounds and per_capita_expend

The distribution of playgrounds, the number of playgrounds per 10,000 residents (the response variable), is unimodal and right-skewed. The center of the distribution is the median of about 2.6 playgrounds per 10,000 residents, and the spread of the middle 50% of the distribution (the IQR) is 1.7. There appear to be two potential outlying cities with more than 6 playgrounds per 10,000 residents, indicating high playground access relative to the other cities in the data set.

The distribution of per_capita_expend, a city’s expenditure per resident (the predictor variable), is also unimodal and right-skewed. The center of the distribution is around 89 dollars per resident, and the middle 50% of the distribution has a spread of about 77 dollars per resident. Similar to the response variable, there are some potential outliers. There are 5 cities that invest more than 300 dollars per resident.

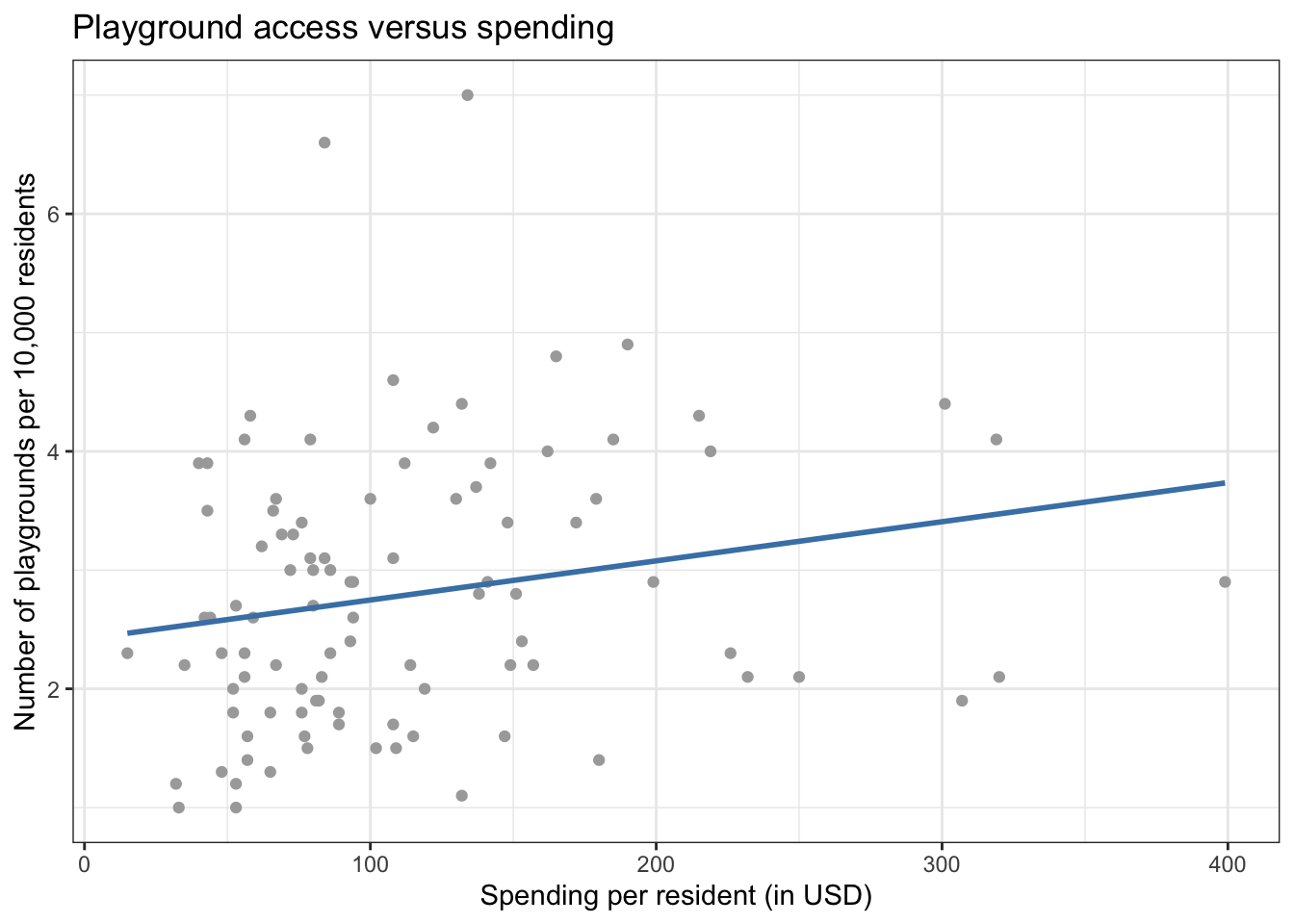

From Figure 5.2 there appears to be a positive relationship between a city’s per capita expenditure and the number of playgrounds per 10,000 residents. The correlation is 0.206, indicating the relationship between playground access and city expenditure is not strong. This is partially influenced by the outlying observations that have relatively low values of per capita expenditure but high numbers of playgrounds per 10,000 residents.

Linear regression model

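To better explore this relationship, we fit a simple linear regression model of the form

$$
\text{playgrounds} = \beta_0 + \beta_1 \times \text{per\_capita\_expend} + \epsilon, \quad \epsilon \sim N(0, \sigma^2_\epsilon) \tag{5.1}
$$

The output of the fitted regression model is in Table 5.2.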

- Interpret the slope in the context of the data.

- Does the intercept have a meaningful interpretation? 2

From the sample of 97 cities in 2020, the estimated slope is 0.003. This estimated slope is likely close to but not the exact value of the true population slope we would obtain using data from every city in the United States. Based on the equation alone, we are also not sure if this slope indicates an actual meaningful relationship between the two variables, or if the slope is due to random variability in the data. We will use statistical inference methods to help answer these questions and use the model to draw conclusions about the relationship between per_capita_expend and playgrounds beyond these 97 cities.

5.2 Objectives of statistical inference

Based on the regression output in Table 5.2, for each additional dollar in per capita expenditure, we expect there to be 0.003 more playgrounds per 10,000 residents, on average.

The estimate 0.003 is the “best guess” of the relationship between per capita expenditure and the number of playgrounds per 10,000 residents; however, this is likely not the exact value of the relationship in the population of all US cities. To say something about the population, we use statistical inference, the process of drawing conclusions about the population based on the analysis of the sample data. More specifically, we will use statistical inference to draw conclusions about the population-level slope, $\beta_1$.

There are two types of statistical inference procedures:

- Hypothesis tests: Test a specific claim about the population-level slope

- Confidence intervals: A range of values that the population-level slope may reasonably take

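This chapter focuses on statistical inference for the slope $\beta_1$.

As we’ll see throughout the chapter, a key component of statistical inference is quantifying the sampling variability, the sample-to-sample variability in the statistic that is the “best guess” estimate for the parameter. For example, when we conduct statistical inference on the slope of per capita expenditure, we need to quantify how much the estimated slope $\hat{\beta}_1$ would vary if we repeatedly took new samples of 97 cities and, for each one, fit the model using per_capita_expend to predict playgrounds to obtain an estimate of the slope. The idea is that the estimated slopes from these repeated samples make up the sampling distribution of $\hat{\beta}_1$.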

While the process described above would be an approach for constructing the sampling distribution, it is not feasible to collect a lot of new samples in practice. Instead, there are two approaches for obtaining the sampling distribution, in order to quantify the variability in the estimated slopes and conduct statistical inference.

Simulation-based methods: Quantify the sampling variability by generating a sampling distribution directly from the data

Theory-based methods: Quantify the sampling variability using mathematical models based on the Central Limit Theorem

Section 5.4 and Section 5.6 introduce statistical inference using simulation-based methods, and Section 5.8 introduces inference using theory-based methods. Before we get into those details, however, let’s introduce more of the foundational ideas underlying simple linear regression and how they relate to statistical inference.

5.3 Foundations of simple linear regression

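In Section 4.3.1, we introduced the statistical model for simple linear regression

$$
Y = \beta_0 + \beta_1 X + \epsilon
$$

such that

$$
\epsilon \sim N(0, \sigma^2_\epsilon)
$$

Equivalently, the response variable $Y$ given the predictor variable $X$ follows the distribution

$$
Y|X \sim N(\beta_0 + \beta_1 X, \sigma^2_\epsilon) \tag{5.4}
$$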

Equation 5.4 is the assumed distribution of the response variable conditional on the predictor variable under the simple linear regression model. Therefore, we conduct simple linear regression assuming Equation 5.4 is true. Based on the equation we specify the assumptions that are made when we do simple linear regression. More specifically, the following assumptions are made based on Equation 5.4:

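- The distribution of the response $Y$ is normal for a given value of the predictor $X$.

- The expected value (mean) of $Y|X$ is $\beta_0 + \beta_1 X$, so there is a linear relationship between the response and predictor variables.

- The variance of $Y|X$ is $\sigma^2_\epsilon$, which is the same for every value of $X$.

- The error terms for each observation, $\epsilon_i$, are independent of one another.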

Whenever we fit linear regression models and conduct inference on the slope, we do so under the assumption that some or all of these four statements hold. In Chapter 6, we will discuss how to check if these assumptions hold in a given analysis. As we might expect, these assumptions do not always perfectly hold in practice, so we will also discuss circumstances in which an assumption is necessary versus when an assumption can be relaxed. For the remainder of this chapter, however, we will proceed as if all four assumptions hold.

5.4 Bootstrap confidence intervals

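We’ll begin by looking at simulation-based methods for statistical inference: bootstrap confidence intervals and permutation tests (Section 5.9.2). In these procedures, we use the sample data to construct a simulated sampling distribution to quantify the sample-to-sample variability in $\hat{\beta}_1$.

A confidence interval is a range of values the population-level slope $\beta_1$ may reasonably take.

In order to obtain this range of values we must understand the sampling variability of the statistic. Suppose we repeatedly take samples of size $n$, the same size as the original sample data, by sampling with replacement from the original sample. Each of these new samples is called a bootstrap sample.

We then fit the regression model and compute $\hat{\beta}_1$ for each bootstrap sample. The distribution of these estimated slopes, called the bootstrap distribution, approximates the sampling distribution of $\hat{\beta}_1$.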

Why do we sample with replacement when generating a bootstrap sample? How would a bootstrap sample compare to the original sample data if sampling is done without replacement?3

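5.4.1 Constructing a bootstrap confidence interval for $\beta_1$

A bootstrap confidence interval for the population slope, $\beta_1$, is constructed using the following steps:

- Generate $n_{iter}$ bootstrap samples, where $n_{iter}$ is the number of iterations. Each bootstrap sample is the same size as the original sample data.

- Fit the linear regression model to each of the $n_{iter}$ bootstrap samples.

- Collect the $n_{iter}$ estimated slopes to form the bootstrap distribution.

- Use the distribution from the previous step to calculate the $C\%$ confidence interval. The lower and upper bounds are the points that mark off the middle $C\%$ of the distribution.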

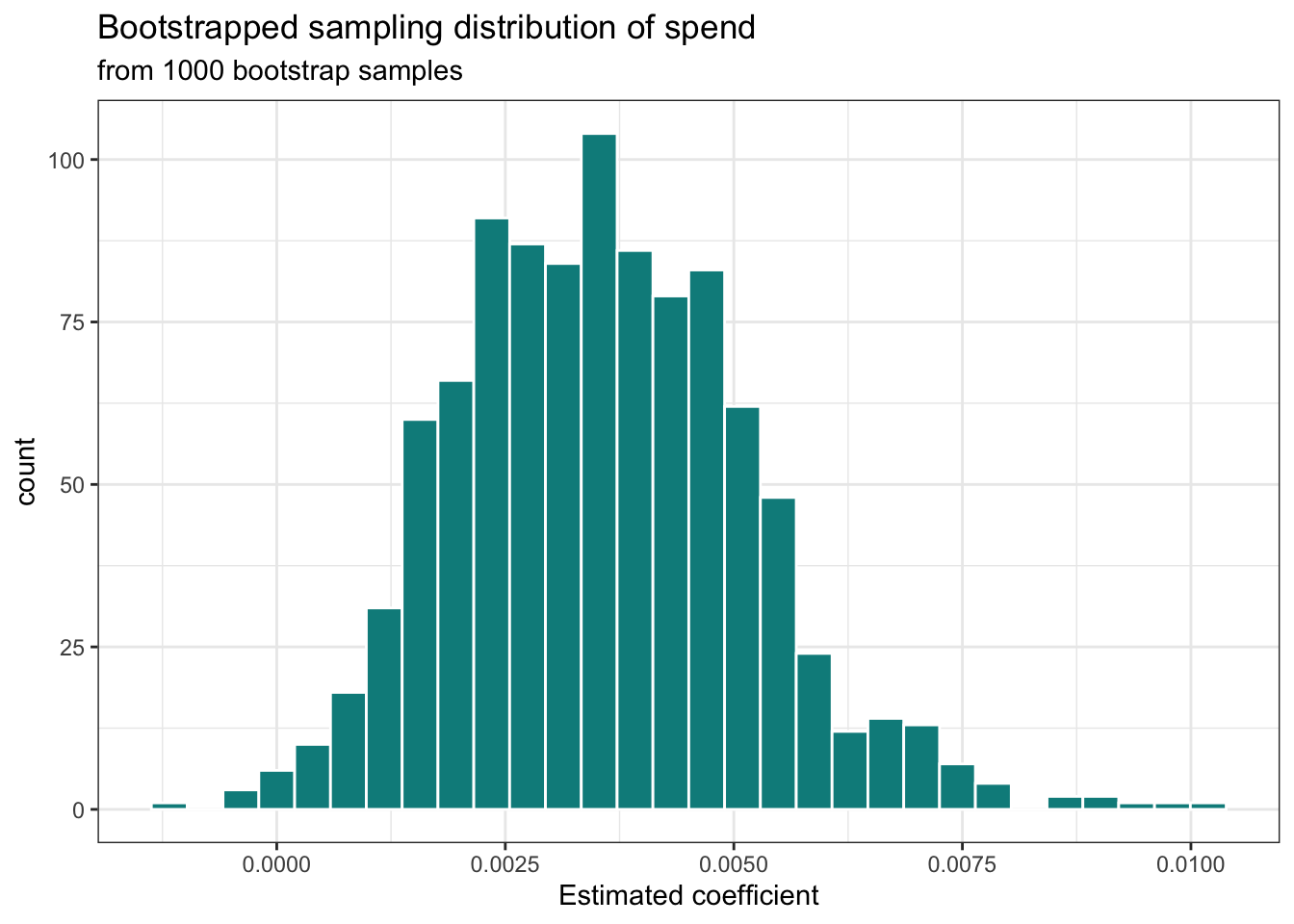

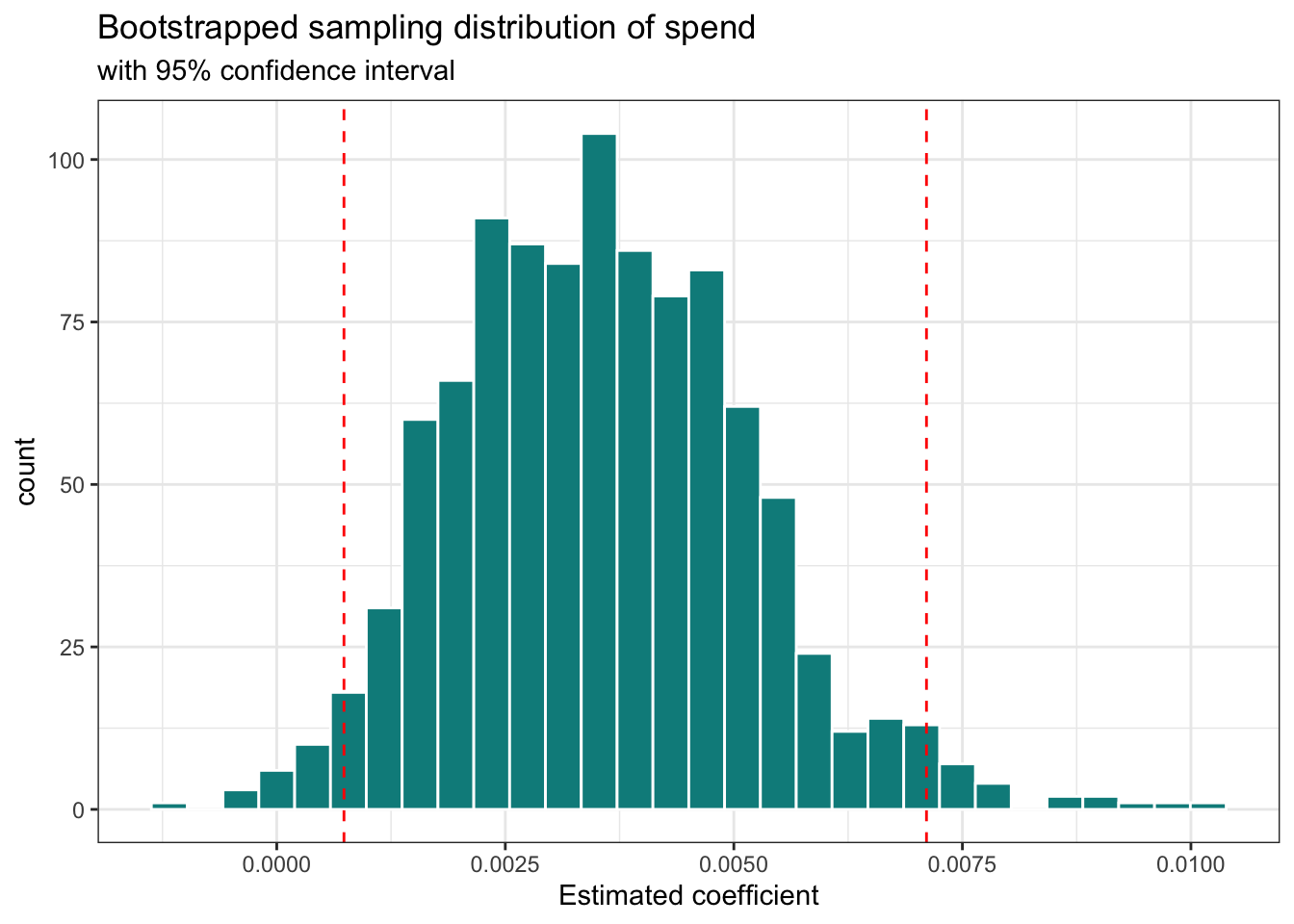

Using these four steps, let’s construct the 95% confidence interval for the population slope $\beta_1$ describing the relationship between per_capita_expend and playgrounds.

- Generate 1000 bootstrap samples (97 observations in each sample) by sampling with replacement from the current sample data of 97 observations. The first 10 observations from the first bootstrapped sample are shown in Table 5.3.

Why are there 97 observations in each bootstrap sample?4

Next, we fit a linear model of the form in Equation 5.1 to each of the 1000 bootstrap samples. The estimated slopes and intercepts for the first three bootstrap samples are shown in Table 5.4.

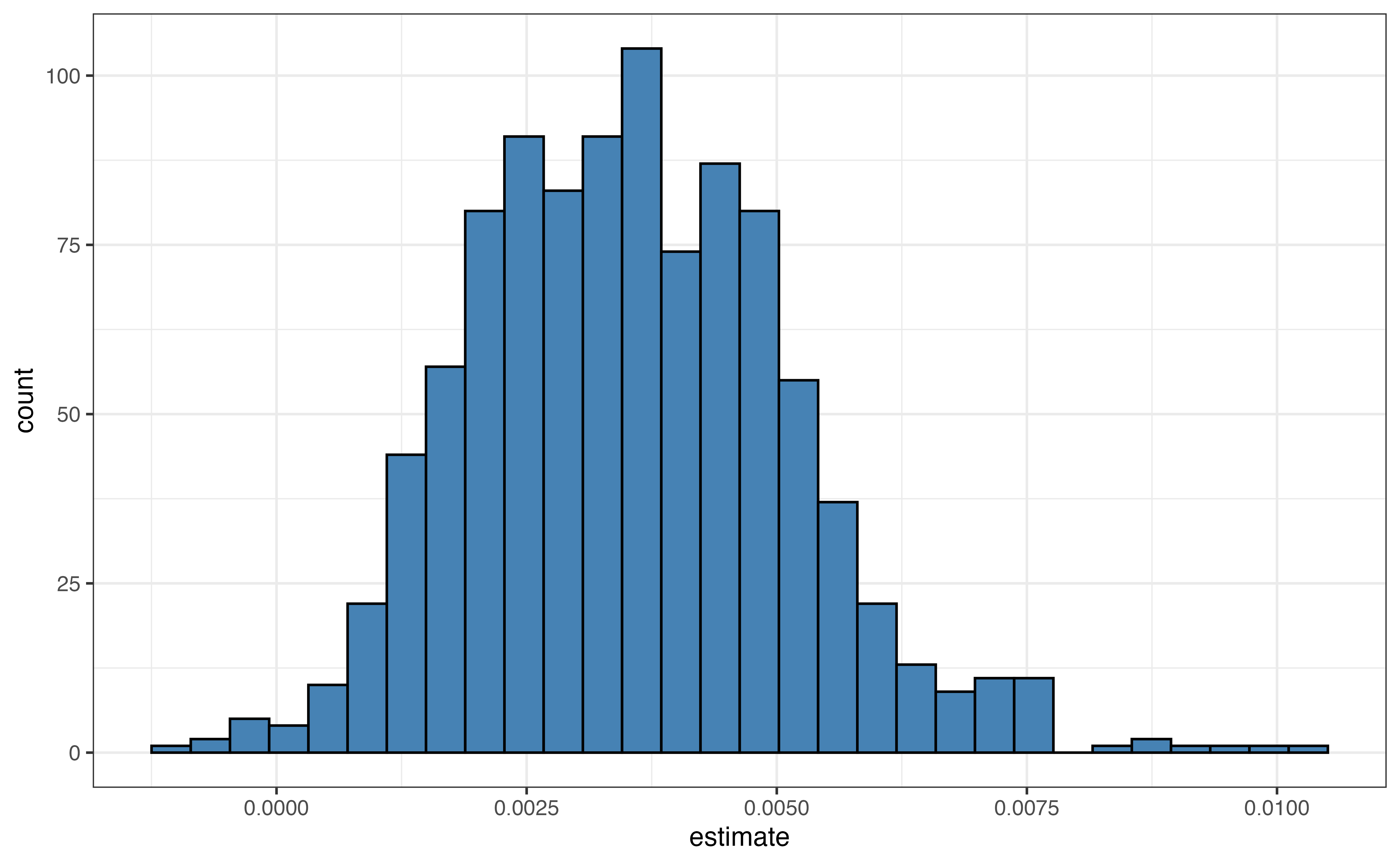

We are focused on inference for the slope of per_capita_expend, so we collect the estimated slopes of per_capita_expend to make the bootstrap distribution. This is the approximation of the sampling distribution of $\hat{\beta}_1$.

How many values of $\hat{\beta}_1$ are in the bootstrap distribution?

- As the final step, we use the bootstrap distribution to calculate the lower and upper bounds of the 95% confidence interval. These bounds are calculated as the points that mark off the middle 95% of the distribution. These are the points at the $2.5^{th}$ and $97.5^{th}$ percentiles of the distribution.

The 95% bootstrapped confidence interval for per_capita_expend is 0.001 to 0.007.
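The four steps above can be sketched in base R. This is a minimal illustration rather than the code used to produce the results in this chapter: the data frame `parks_sim` is a simulated stand-in for the parks data (its values are an assumption made only so the example runs on its own), and the seed is arbitrary.

```r
# Simulated stand-in for the parks data (assumption: NOT the real values)
set.seed(210)
n <- 97
parks_sim <- data.frame(per_capita_expend = rgamma(n, shape = 2, scale = 50))
parks_sim$playgrounds <- 2.4 + 0.003 * parks_sim$per_capita_expend + rnorm(n)

n_iter <- 1000
boot_slopes <- numeric(n_iter)

for (i in seq_len(n_iter)) {
  # Step 1: draw n rows with replacement to form one bootstrap sample
  rows <- sample(n, size = n, replace = TRUE)
  # Step 2: fit the regression model to the bootstrap sample
  boot_fit <- lm(playgrounds ~ per_capita_expend, data = parks_sim[rows, ])
  # Step 3: keep the estimated slope
  boot_slopes[i] <- coef(boot_fit)[["per_capita_expend"]]
}

# Step 4: the 2.5th and 97.5th percentiles mark off the middle 95%
ci_95 <- quantile(boot_slopes, probs = c(0.025, 0.975))
ci_95
```

Changing `probs` to `c(0.05, 0.95)` would give the bounds of a 90% interval from the same bootstrap distribution.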

The points at what percentiles in the bootstrap distribution mark the lower and upper bounds for a

- 90% confidence interval?

- 98% confidence interval?6

5.4.2 Interpreting the interval

The general interpretation of the 95% confidence interval for per_capita_expend is

We are 95% confident that the interval 0.001 to 0.007 contains the population slope for per capita expenditure in the model of the relationship between a city’s per capita expenditure and number of playgrounds per 10,000 residents.

Though this interpretation indicates the range of values that may reasonably contain the true population slope for per_capita_expend, it still requires the reader to further interpret what it means about the relationship between per_capita_expend and playgrounds. It is more informative to interpret the confidence interval in a way that also utilizes the interpretation of the slope from Section 5.1.1, so the reader more clearly understands what the confidence interval is conveying. Thus, a more complete and informative interpretation of the confidence interval is as follows:

We are 95% confident that for each additional dollar a city spends per resident, there are between 0.001 and 0.007 more playgrounds per 10,000 residents, on average.

This interpretation not only indicates the range of values as before, but it also clearly describes what this range means in terms of the average change in playgrounds per 10,000 residents as a city’s per capita expenditure increases.

5.4.3 What does “confidence” mean?

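The beginning of the interpretation for a confidence interval is “We are $C\%$ confident . . .”, where $C\%$ is the confidence level (for example, 95%). Here, “confidence” describes the long-run behavior of the procedure: if we were to repeat the process of collecting a sample and constructing a $C\%$ confidence interval many times, we would expect about $C\%$ of those intervals to contain the population slope.

In reality we don’t know the value of the population slope (if we did, we wouldn’t need statistical inference!), so we can’t definitively conclude if the interval constructed in Section 5.4.1 is one of the $C\%$ of intervals that contain the population slope or one of the $(100 - C)\%$ that do not.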

Thus far, we have used a confidence interval to produce a plausible range of values for the population slope. We can also test specific claims about the population slope using another inferential procedure called hypothesis testing.

5.5 Hypothesis tests

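Hypothesis tests are used to evaluate a claim about a population parameter. The claim could be based on previous research, an idea a research or business team wants to explore, or a general statement about the parameter. We will again focus on the population slope $\beta_1$. To introduce the general process, we use an analogy between a hypothesis test and a trial in the US judicial system.

5.5.1 Define the hypotheses

The first step of any hypothesis test (or trial) is to define the hypotheses that will be evaluated. These hypotheses are called the null and alternative. The null hypothesis ($H_0$) is the baseline claim that is assumed to be true, and the alternative hypothesis ($H_a$) is the claim we are seeking evidence for.

In the US judicial system, a defendant is deemed innocent unless proven otherwise. Therefore, the null and alternative hypotheses are $H_0$: the defendant is not guilty, and $H_a$: the defendant is guilty.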

5.5.2 Evaluate the evidence

The primary component of a trial (or hypothesis test) is presenting and evaluating the evidence. In a trial, this is the point when the evidence is presented and evaluated under the assumption that the null hypothesis (the defendant is not guilty) is true. Thus, the lens through which the evidence is evaluated is “given the defendant is not guilty, how likely is it that this evidence would exist?”

For example, suppose an individual is on trial for a robbery at a jewelry store. The null hypothesis is that they are not guilty and did not rob the jewelry store. The alternative hypothesis is they are guilty and did rob the jewelry store. If there is evidence that the person was in a different city during the time of the jewelry store robbery, the evidence would be more in support of the null hypothesis of innocence. It seems plausible the individual could have been in a different city at the time of the robbery if the null hypothesis is true. Alternatively, if some of the missing jewelry was found in the individual’s car, the evidence would seem to be strongly in support of the alternative hypothesis. If the null hypothesis is true, it does not seem likely that the individual would have the missing jewelry in their car.

In hypothesis testing, the “evidence” being assessed is the analysis of the sample data. Thus we are considering the question “given the null hypothesis is true, how likely is it to observe the results seen in the sample data?” We will introduce approaches to address this question using simulation-based methods in Section 5.6 and theory-based methods in Section 5.8.3.

5.5.3 Make a conclusion

There are two typical conclusions in a trial in the US judicial system: the defendant is guilty or not guilty based on the evidence. The criterion to conclude the alternative that a defendant is guilty is that the strength of evidence must be “beyond reasonable doubt”. If there is sufficiently strong evidence against the null hypothesis of not guilty, then the conclusion is the alternative hypothesis that the defendant is guilty. Otherwise, the conclusion is that the defendant is not guilty, indicating the evidence against the null was not strong enough to refute it. Note that this is not the same as “accepting” the null hypothesis but rather indicating that there wasn’t enough evidence to suggest otherwise.

Similarly in hypothesis testing, we will use a predetermined threshold to assess if the evidence against the null hypothesis is strong enough to reject the null hypothesis and conclude the alternative, or if there is not enough evidence “beyond a reasonable doubt” to draw a conclusion other than the assumed null hypothesis.

5.6 Permutation tests

Now that we have explained the general process of hypothesis testing, let’s take a look at hypothesis testing using a simulation-based approach, called a permutation test.

The four steps of a permutation test for a slope $\beta_1$ are the following:

- State the null and alternative hypotheses.

- Generate the null distribution.

- Calculate the p-value.

- Draw a conclusion.

These steps are described in detail in the context of the hypothesis test for the slope in Equation 5.1.

5.6.1 State the hypotheses

As defined in Section 5.5, the null hypothesis ($H_0$) is the baseline claim that is assumed to be true, and the alternative hypothesis ($H_a$) is the claim we are seeking evidence for. Here, the claim of interest concerns the relationship between per_capita_expend and playgrounds. The null hypothesis is the baseline condition of there being no linear relationship between the two variables. We use this claim to define the alternative hypothesis.

- Null hypothesis: There is no linear relationship between playgrounds per 10,000 residents and per capita expenditure. The slope of per_capita_expend is equal to 0.

- Alternative hypothesis: There is a linear relationship between playgrounds per 10,000 residents and per capita expenditure. The slope of per_capita_expend is not equal to 0.

Note that we have not hypothesized whether the slope is positive or negative.

The hypotheses are defined specifically in terms of the linear relationship between the two variables, because we are ultimately drawing conclusions about the slope $\beta_1$.

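Mathematical statement of hypotheses for $\beta_1$

Suppose there is a response variable $Y$ and a predictor variable $X$ such that $Y = \beta_0 + \beta_1 X + \epsilon$, with $\epsilon \sim N(0, \sigma^2_\epsilon)$.

The hypotheses for testing whether there is a linear relationship between $X$ and $Y$ are

$$
H_0: \beta_1 = 0 \quad \text{vs.} \quad H_a: \beta_1 \neq 0 \tag{5.5}
$$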

One vs. two-sided hypotheses

The alternative hypothesis defined in Equation 5.5 is “not equal to 0”. This is the alternative hypothesis corresponding to a two-sided hypothesis test, because it includes the scenarios in which $\beta_1$ is less than 0 or greater than 0.

A one-sided hypothesis test imposes some information about the direction of the parameter, that is, whether it is positive ($H_a: \beta_1 > 0$) or negative ($H_a: \beta_1 < 0$).

Because a two-sided hypothesis test makes no assumption about the direction of the relationship between the response variable and predictor variable, it is a good starting point for drawing conclusions about the relationship between the two variables. From the two-sided hypothesis, we will conclude whether there is or is not sufficient statistical evidence of a linear relationship between the response and predictor. With this conclusion, we cannot determine if the relationship between the variables is positive or negative without additional analysis. We use a confidence interval (Section 5.4) to make specific conclusions about the direction and magnitude of the relationship.

5.6.2 Simulate the null distribution

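Recall that hypothesis tests are conducted assuming the null hypothesis $H_0$ is true.

To assess the evidence, we will use a simulation-based method to approximate the sampling distribution of the estimated slope $\hat{\beta}_1$ under the assumption that the null hypothesis is true. This distribution is called the null distribution, and the samples used to construct it are generated by permutation sampling.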

In permutation sampling the values of the predictor variable are randomly shuffled and paired with values of the response, thus generating a new sample of the same size as the original data. The process of randomly pairing the values of the response and the predictor variables simulates the null hypothesized condition that there is no linear relationship between the two variables.

The steps for simulating the null distribution using permutation sampling are the following:

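- Generate $n_{iter}$ permutation samples, where $n_{iter}$ is the number of iterations.

- Fit the linear regression model to each of the $n_{iter}$ permutation samples to obtain $n_{iter}$ estimates of the slope.

- Collect the $n_{iter}$ estimated slopes to construct the simulated null distribution.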

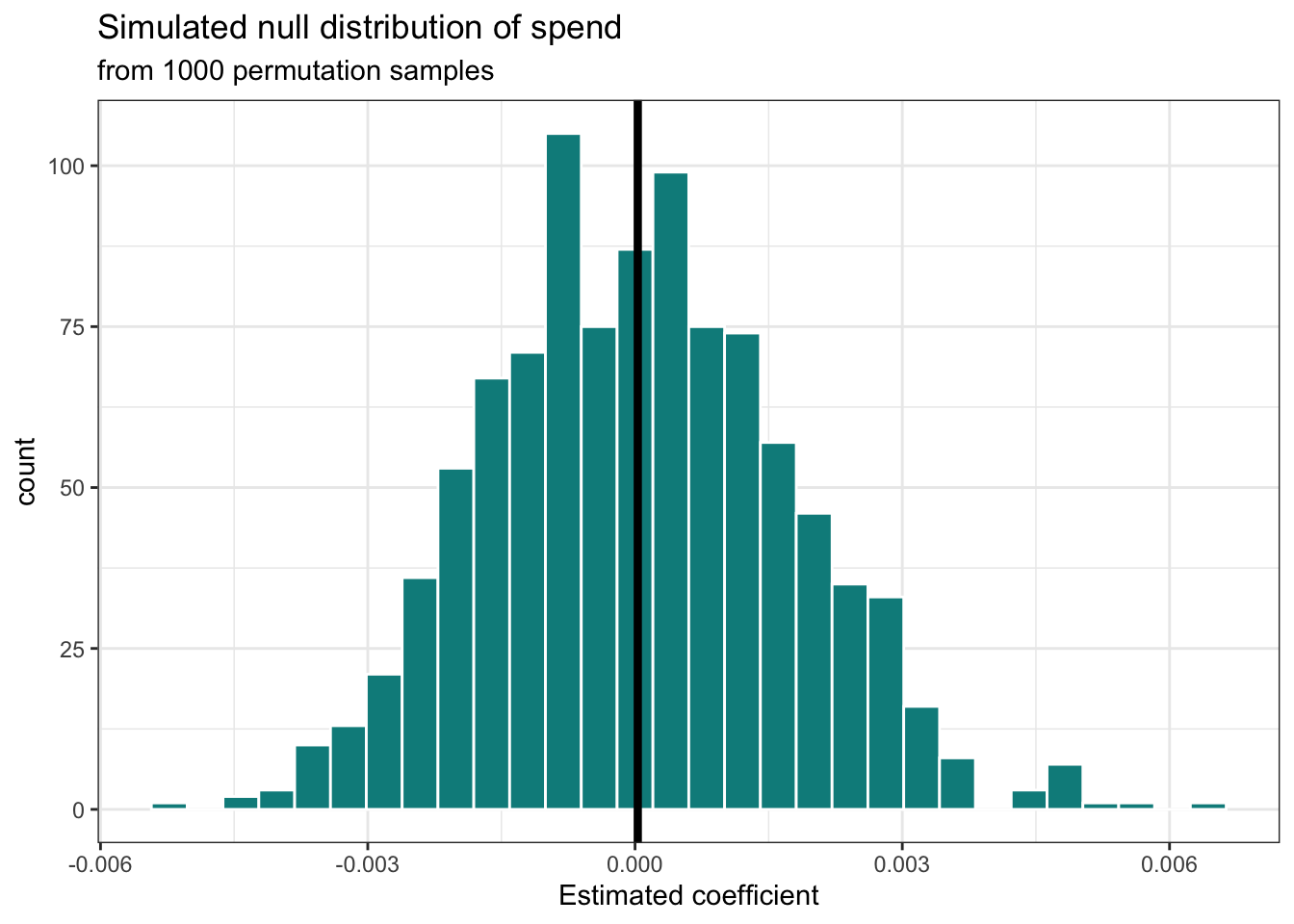

Let’s simulate the null distribution to test the hypotheses in Equation 5.5 for the parks data.

- First we generate 1,000 permutation samples, such that in each sample, we permute the values of per_capita_expend, randomly pairing each to a value of playgrounds. This is to simulate the scenario in which there is no linear relationship between per_capita_expend and playgrounds. The first 10 rows of the first permutation sample are in Table 5.7.

- Next, we fit a linear regression model to each of the 1000 permutation samples. This gives us 1000 estimates of the slope and intercept. The slopes estimated from the first 10 permutation samples are shown in Table 5.8.

| replicate | term | estimate |
|---|---|---|
| 1 | per_capita_expend | 0.000 |
| 2 | per_capita_expend | 0.001 |
| 3 | per_capita_expend | 0.001 |
| 4 | per_capita_expend | -0.002 |
| 5 | per_capita_expend | -0.002 |
| 6 | per_capita_expend | 0.004 |
| 7 | per_capita_expend | -0.002 |
| 8 | per_capita_expend | -0.002 |
| 9 | per_capita_expend | -0.001 |
| 10 | per_capita_expend | 0.001 |

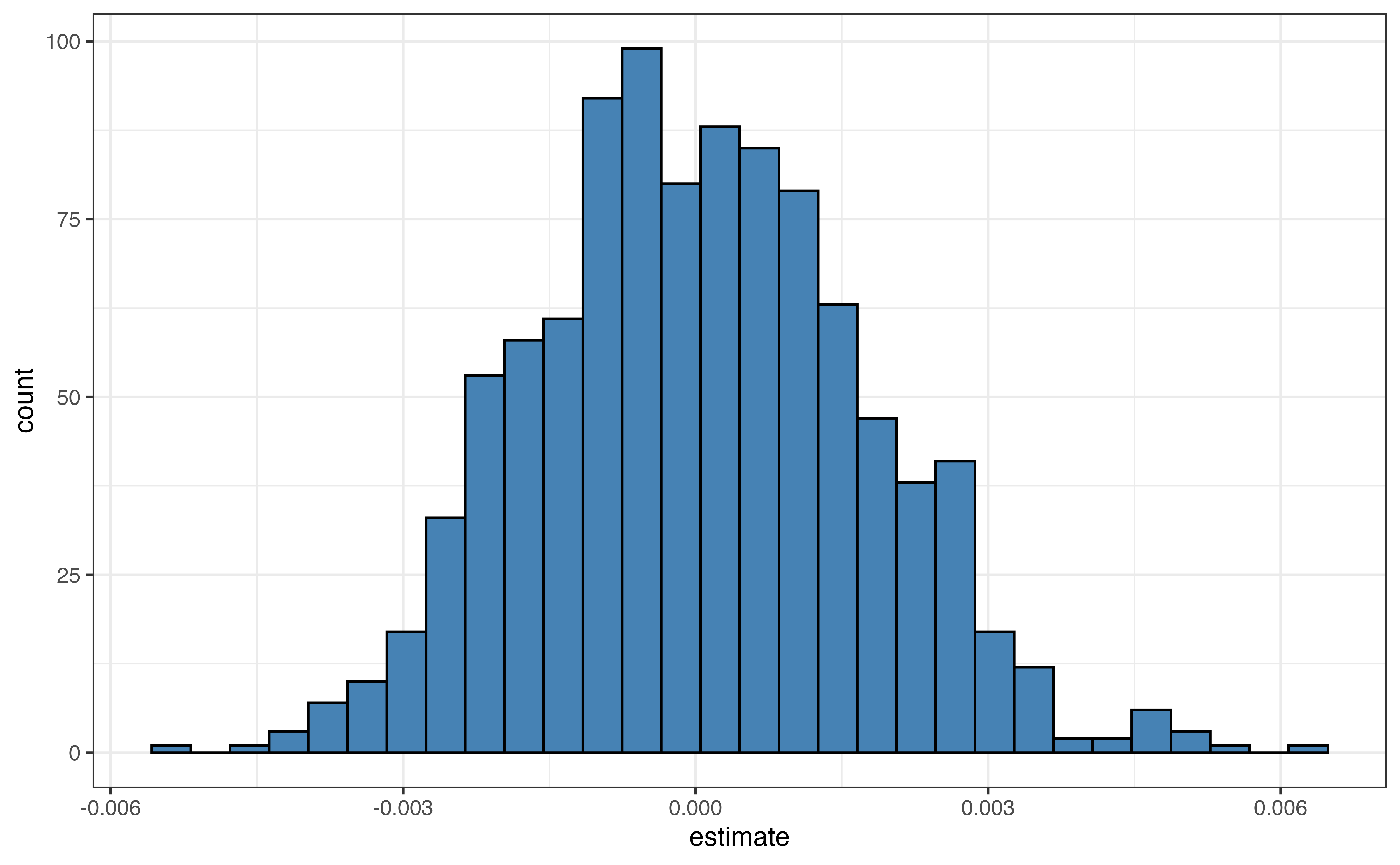

Next, we collect the estimated slopes from the previous step to construct the simulated null distribution. We will use this distribution to assess the strength of the evidence from the original sample data against the null hypothesis.

Note that the distribution visualized in Figure 5.5 and summarized in Table 5.9 is approximately unimodal, symmetric, and looks similar to the normal distribution. As the number of iterations (permutation samples) increases, the simulated null distribution will be closer and closer to a normal distribution. Additionally, the center of the distribution is approximately 0, the null hypothesized value. The standard deviation of this distribution, 0.002, is an estimate of the standard error of $\hat{\beta}_1$.

5.6.3 Calculate p-value

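The null distribution helps us understand the values $\hat{\beta}_1$, the estimated slope of per_capita_expend, is expected to take if we repeatedly take random samples and fit a linear regression model, assuming the null hypothesis $H_0: \beta_1 = 0$ is true. To evaluate the evidence against the null hypothesis, we compare the slope estimated from the observed data to this null distribution.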

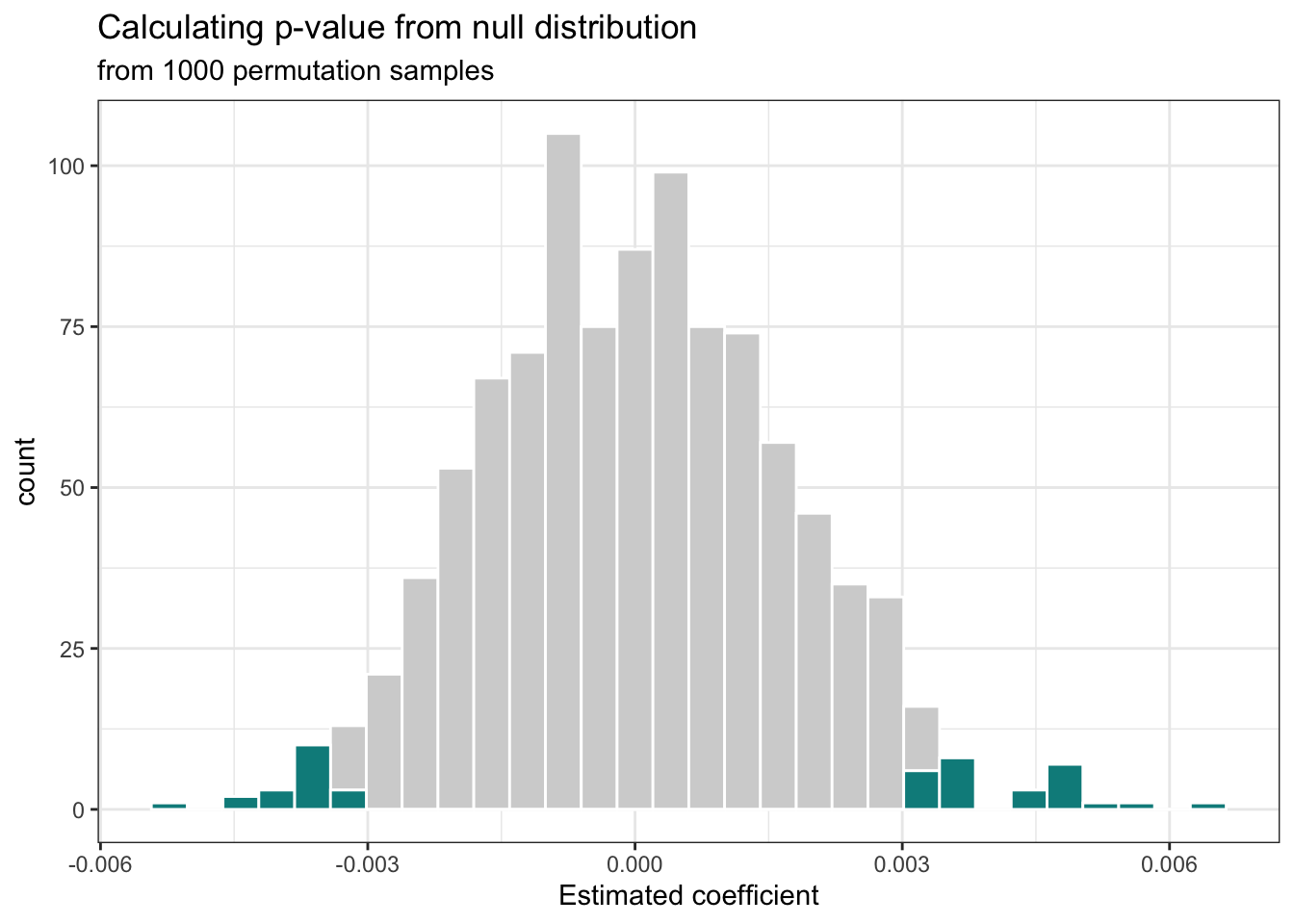

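This comparison is quantified using a p-value. The p-value is the probability of observing estimated slopes at least as extreme as the value estimated from the sample data, given the null hypothesis is true. In the context of the parks data, the p-value is the probability of observing values of the slope that are at least as extreme as $\hat{\beta}_1 = 0.003$, the slope estimated from the sample of 97 cities, given the population slope is 0.

In the context of statistical inference, the phrase “more extreme” means the area between the estimated value ($\hat{\beta}_1$ in this case) and the tail or tails of the null distribution in the direction specified by the alternative hypothesis $H_a$.

If $H_a: \beta_1 > 0$, the p-value is the proportion of the null distribution that is greater than or equal to $\hat{\beta}_1$.

If $H_a: \beta_1 < 0$, the p-value is the proportion of the null distribution that is less than or equal to $\hat{\beta}_1$.

If $H_a: \beta_1 \neq 0$, the p-value is the proportion of the null distribution that is at least as far from 0 as $\hat{\beta}_1$ in either direction, that is, values whose magnitude is greater than or equal to $|\hat{\beta}_1|$.

Recall from Section 5.6.1 that we are testing a two-sided alternative hypothesis. Therefore, we will calculate the p-value corresponding to the alternative hypothesis $H_a: \beta_1 \neq 0$.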

The p-value for this hypothesis test is 0.046 and is shown by the dark shaded area in Figure 5.6.
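As a rough sketch of how the null distribution and two-sided p-value could be computed by hand, the base R code below permutes the predictor, refits the model, and takes the proportion of permuted slopes at least as far from 0 as the observed slope. The data frame `parks_sim` is a simulated stand-in (an assumption, not the real parks data), so the resulting p-value will not match the 0.046 reported above. Software such as the infer package may compute the two-sided p-value slightly differently.

```r
# Simulated stand-in for the parks data (assumption: NOT the real values)
set.seed(210)
n <- 97
parks_sim <- data.frame(per_capita_expend = rgamma(n, shape = 2, scale = 50))
parks_sim$playgrounds <- 2.4 + 0.003 * parks_sim$per_capita_expend + rnorm(n)

# Slope estimated from the observed (here: simulated) sample
obs_fit <- lm(playgrounds ~ per_capita_expend, data = parks_sim)
obs_slope <- coef(obs_fit)[["per_capita_expend"]]

n_iter <- 1000
null_slopes <- numeric(n_iter)

for (i in seq_len(n_iter)) {
  shuffled <- parks_sim
  # Randomly re-pair predictor values with responses (no linear relationship)
  shuffled$per_capita_expend <- sample(shuffled$per_capita_expend)
  perm_fit <- lm(playgrounds ~ per_capita_expend, data = shuffled)
  null_slopes[i] <- coef(perm_fit)[["per_capita_expend"]]
}

# Two-sided p-value: proportion of null slopes at least as extreme as observed
p_value <- mean(abs(null_slopes) >= abs(obs_slope))
p_value
```

The mean of `null_slopes` should be close to 0, the null hypothesized value, and `sd(null_slopes)` estimates the standard error of the slope under the null hypothesis.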

Use the definition of the p-value at the beginning of this section to interpret the p-value of 0.046 in the context of the data.8

5.6.4 Draw conclusion

We ultimately want to evaluate the strength of evidence against the null hypothesis. The p-value is a measure of the strength of that evidence and is used to draw one of the following conclusions:

- If the p-value is “sufficiently small”, there is strong evidence against the null hypothesis. We reject the null hypothesis, $H_0$.

- If the p-value is not “sufficiently small”, there is not strong enough evidence against the null hypothesis. We fail to reject the null hypothesis, $H_0$.

We use a predetermined decision-making threshold called an $\alpha$-level to determine whether a p-value is sufficiently small.

If the p-value is less than $\alpha$, we reject $H_0$.

If the p-value is greater than or equal to $\alpha$, we fail to reject $H_0$.

A commonly used threshold is $\alpha = 0.05$.

Back to the parks analysis. We will use the common threshold of $\alpha = 0.05$.

The p-value calculated in the previous section is 0.046. Therefore, we reject the null hypothesis $H_0$. The data provide sufficient evidence of a linear relationship between the amount a city spends per resident and the number of playgrounds per 10,000 residents.

5.6.5 Type I and Type II error

Regardless of the conclusion that is drawn (reject or fail to reject the null hypothesis), we have not determined that the null or alternative hypothesis is the definitive truth. We have just concluded that the data provide more evidence in favor of one conclusion versus the other. As with any statistical procedure, there is the possibility of making an error, more specifically a Type I or Type II error. Because we don’t know the value of the population slope, we will not know for certain whether we have made an error; however, understanding the potential errors that can be made can help inform the decision-making threshold $\alpha$.

Table 5.10 shows how Type I and Type II errors correspond to the (unknown) truth and the conclusion drawn from the hypothesis test.

| | | Truth | |
|---|---|---|---|
| | | $H_0$ true | $H_a$ true |
| Hypothesis test decision | Fail to reject $H_0$ | Correct decision | Type II error |
| | Reject $H_0$ | Type I error | Correct decision |

A Type I error has occurred if the null hypothesis is actually true, but the p-value is small enough to reject the null hypothesis. The probability of making this type of error is the decision-making threshold $\alpha$.

A Type II error has occurred if the alternative hypothesis is actually true, but we fail to reject the null hypothesis, because the p-value is large. Computing the probability of making this type of error is less straightforward. It is calculated as $1 - \text{Power}$, where the power is the probability of rejecting the null hypothesis when the alternative hypothesis is true.

In the context of the parks data, a Type I error is concluding that there is a linear relationship between per capita expenditure and playgrounds per 10,000 residents in the model, when there actually isn’t one in the population. A Type II error is concluding there is no linear relationship between per capita expenditure and playgrounds per 10,000 residents when in fact there is.
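The claim that the Type I error probability equals $\alpha$ can be checked with a small simulation. The sketch below repeatedly generates data in which the true slope is 0, tests $H_0: \beta_1 = 0$ with the p-value reported by `lm()` (the theory-based test introduced in Section 5.8.3), and records how often the null hypothesis is rejected. All values here are simulated assumptions, not the parks data.

```r
set.seed(2024)
n <- 97
alpha <- 0.05
n_sims <- 2000

# Fixed, simulated predictor values (assumption: NOT the real parks data)
x <- rgamma(n, shape = 2, scale = 50)

reject <- logical(n_sims)
for (s in seq_len(n_sims)) {
  # The null hypothesis is true: y does not depend on x
  y <- 2.7 + rnorm(n)
  p_val <- coef(summary(lm(y ~ x)))["x", "Pr(>|t|)"]
  reject[s] <- p_val < alpha
}

# Proportion of simulations that end in a Type I error
type1_rate <- mean(reject)
type1_rate
```

The proportion of rejections should be close to 0.05, with some simulation noise; lowering `alpha` lowers the Type I error rate at the cost of making Type II errors more likely.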

Given the conclusion in Section 5.6.4, is it possible we’ve made a Type I or Type II error?9

5.7 Relationship between confidence intervals and hypothesis tests

At this point, we might wonder whether there is any connection between the confidence intervals and hypothesis tests. Spoiler alert: there is!

Testing a claim with the two-sided alternative $H_a: \beta_1 \neq 0$ and decision-making threshold $\alpha$ corresponds to using a $C\%$ confidence interval with $C = (1 - \alpha) \times 100$ to evaluate the claim:

If the null hypothesized value ($\beta_1 = 0$ in this case) is within the range of the confidence interval, fail to reject $H_0$ at the $\alpha$ level.

If the null hypothesized value is not within the range of the confidence interval, reject $H_0$ at the $\alpha$ level.

This illustrates the power of confidence intervals; they can not only be used to draw a conclusion about a claim (reject or fail to reject $H_0$), but they also provide a range of plausible values for the population slope.

When we reject a null hypothesis, we conclude that there is a statistically significant linear relationship between the response and predictor variables. Concluding there is a statistically significant relationship between the response and predictor, however, does not necessarily mean that the relationship is practically significant. The practical significance, how meaningful the results are in the real world, is determined by the magnitude of the estimated slope of the predictor on the response and what an effect of that magnitude means in the context of the data and analysis question.

5.8 Theory-based inference

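Thus far we have approached inference using simulation-based methods (bootstrapping and permutation) to generate sampling distributions and null distributions. When certain conditions are met, however, we can use theoretical results about the sampling distribution to understand the variability in $\hat{\beta}_1$.

5.8.1 Central Limit Theorem

The Central Limit Theorem (CLT) is a foundational theorem in statistics about the distribution of a statistic and the associated mathematical properties of that distribution. For the purposes of this text, we will focus on what the Central Limit Theorem says about the distribution of an estimated slope $\hat{\beta}_1$.

By the Central Limit Theorem, we know under certain conditions (more on these conditions in Chapter 6)

$$
\hat{\beta}_1 \sim N(\beta_1, SE_{\hat{\beta}_1}^2) \tag{5.6}
$$

Equation 5.6 means that by the Central Limit Theorem, we know that the sampling distribution of $\hat{\beta}_1$ is normal and centered at the population slope $\beta_1$, with standard error

$$
SE_{\hat{\beta}_1} = \hat{\sigma}_\epsilon \sqrt{\frac{1}{(n - 1) s_X^2}} \tag{5.7}
$$

where $n$ is the number of observations, $s_X^2$ is the sample variance of the predictor variable, and $\hat{\sigma}_\epsilon$ is the regression standard error.

The regression standard error $\hat{\sigma}_\epsilon$ is the estimate of $\sigma_\epsilon$, the standard deviation of the error terms. It is discussed in Section 5.8.2.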

As you will see in the following sections, we will use this estimate of the sampling variability in the estimated slope to draw conclusions about the true relationship between the response and predictor variables based on hypothesis testing and confidence intervals.

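5.8.2 Estimating $\sigma_\epsilon$

As discussed in Section 4.3.1, there are three parameters that need to be estimated for simple linear regression: $\beta_0$, $\beta_1$, and $\sigma_\epsilon$.

By obtaining the estimates $\hat{\beta}_0$ and $\hat{\beta}_1$, we can compute the residuals $e_i = y_i - \hat{y}_i$ and use them to estimate $\sigma_\epsilon$:

$$
\hat{\sigma}_\epsilon = \sqrt{\frac{\sum_{i=1}^{n} e_i^2}{n - 2}} = \sqrt{\frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{n - 2}} \tag{5.8}
$$

You may have noticed that the denominator in Equation 5.8 is $n - 2$, not $n - 1$ as in the formula for the sample standard deviation. This is because two parameters, $\beta_0$ and $\beta_1$, must be estimated before the residuals can be computed, which leaves $n - 2$ degrees of freedom.

Recall that the standard deviation is the average distance between each observation and the mean of the distribution. Similarly, the regression standard error can be thought of as the average distance between the observed values of the response and the regression line. The regression standard error $\hat{\sigma}_\epsilon$ is used to calculate the standard error of the estimated slope, $SE_{\hat{\beta}_1}$ (Section 5.8.1).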
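To make the two formulas concrete, the sketch below computes the regression standard error from the residuals (dividing by $n - 2$) and then the standard error of the slope, and compares both to the values reported by `summary()` for an `lm` fit. The data frame `parks_sim` is a simulated stand-in, an assumption made so the example is self-contained.

```r
# Simulated stand-in for the parks data (assumption: NOT the real values)
set.seed(210)
n <- 97
parks_sim <- data.frame(per_capita_expend = rgamma(n, shape = 2, scale = 50))
parks_sim$playgrounds <- 2.4 + 0.003 * parks_sim$per_capita_expend + rnorm(n)

fit <- lm(playgrounds ~ per_capita_expend, data = parks_sim)

# Regression standard error: residual sum of squares divided by n - 2
e <- resid(fit)
sigma_hat <- sqrt(sum(e^2) / (n - 2))

# Standard error of the slope: sigma_hat * sqrt(1 / ((n - 1) * s_X^2))
s2_x <- var(parks_sim$per_capita_expend)
se_slope <- sigma_hat * sqrt(1 / ((n - 1) * s2_x))

# Compare with the values computed by R
c(by_hand = sigma_hat, from_lm = summary(fit)$sigma)
c(by_hand = se_slope,
  from_lm = coef(summary(fit))["per_capita_expend", "Std. Error"])
```

The hand-computed values agree with the model output, which is a useful check that the formulas are being applied with the right denominator.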

5.8.3 Hypothesis test for the slope

The overall goals of hypothesis tests for a population slope are the same when using theory-based methods as previously described in Section 5.5. We define a null and alternative hypothesis, conduct testing assuming the null hypothesis is true, and draw a conclusion based on an evaluation of the strength of evidence against the null hypothesis. The main difference from the simulation-based approach in Section 5.6 is in how we quantify the variability in $\hat{\beta}_1$.

The steps for conducting a hypothesis test based on the Central Limit Theorem are the following:

- State the null and alternative hypotheses.

- Calculate a test statistic.

- Calculate a p-value.

- Draw a conclusion.

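As in Section 5.6, the goal is to use hypothesis testing to determine whether there is evidence of a statistically significant linear relationship between a response and predictor variable, corresponding to the two-sided alternative hypothesis of “not equal to 0”. Therefore, the null and alternative hypotheses are the same as defined in Equation 5.5.

The next step is to calculate a test statistic. Similar to a $z$-score, the test statistic measures how far the observed estimate is from the hypothesized value in units of standard errors:

$$
\text{test statistic} = \frac{\text{estimate} - \text{hypothesized value}}{\text{standard error}}
$$

More specifically, in the hypothesis test for $\beta_1$, the test statistic is

$$
T = \frac{\hat{\beta}_1 - 0}{SE_{\hat{\beta}_1}}
$$

To calculate the test statistic, the estimated slope is shifted by the mean, and then rescaled by the standard error. Let’s consider what we learn from the test statistic. Recall that by the Central Limit Theorem, the distribution of $\hat{\beta}_1$ is normal with mean $\beta_1$ and standard error $SE_{\hat{\beta}_1}$.

Since the hypothesized mean is $\beta_1 = 0$ under the null hypothesis, the test statistic tells us how many standard errors the estimated slope is from 0.

Consider the magnitude of the test statistic, $|T|$. A large magnitude means the estimated slope is far from the hypothesized value relative to its sampling variability, which is evidence against the null hypothesis. A small magnitude means the estimated slope is consistent with the null hypothesis.

Next, we use the test statistic to calculate a p-value and we will ultimately use the p-value to draw a conclusion about the strength of the evidence against the null hypothesis, as before. The test statistic, $T$, follows a $t$ distribution with $n - 2$ degrees of freedom.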

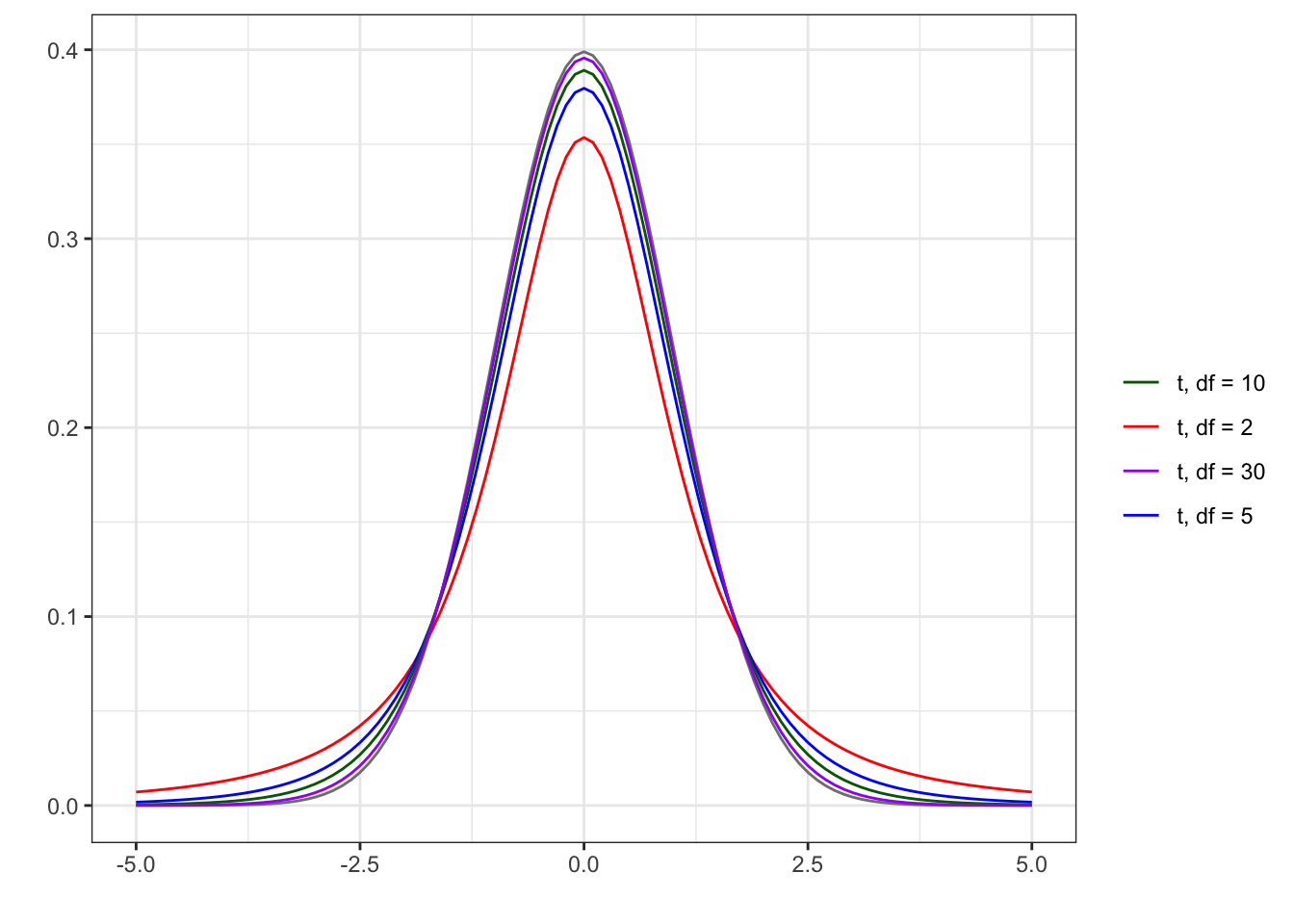

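Though the sampling distribution of $\hat{\beta}_1$ is normal by the Central Limit Theorem, the standard error is calculated using $\hat{\sigma}_\epsilon$, an estimate of $\sigma_\epsilon$. The $t$ distribution accounts for the extra uncertainty introduced by this estimate.

Figure 5.7 shows the standard normal distribution $N(0, 1)$ along with $t$ distributions. The $t$ distribution has heavier tails than the standard normal distribution, and it gets closer to the standard normal distribution as the degrees of freedom increase.

As described in Section 5.6.3, because the alternative hypothesis is “not equal to”, the p-value is calculated on both the high and low extremes of the distribution as shown in Equation 5.10.

$$
\text{p-value} = P(|t| \geq |T|) = P(t \leq -|T|) + P(t \geq |T|) \tag{5.10}
$$

We compare the p-value to a decision-making threshold $\alpha$ to draw a conclusion, as in Section 5.6.4.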
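The test statistic and two-sided p-value can be computed directly from the estimated slope and its standard error. The sketch below does this with `pt()` and checks the result against the `t value` and `Pr(>|t|)` columns of the `lm` summary. The data frame `parks_sim` is a simulated stand-in (an assumption), so the numbers differ from those in Table 5.2.

```r
# Simulated stand-in for the parks data (assumption: NOT the real values)
set.seed(210)
n <- 97
parks_sim <- data.frame(per_capita_expend = rgamma(n, shape = 2, scale = 50))
parks_sim$playgrounds <- 2.4 + 0.003 * parks_sim$per_capita_expend + rnorm(n)

fit <- lm(playgrounds ~ per_capita_expend, data = parks_sim)
slope_row <- coef(summary(fit))["per_capita_expend", ]

# Test statistic: (estimate - hypothesized value) / standard error
t_stat <- (slope_row[["Estimate"]] - 0) / slope_row[["Std. Error"]]

# Two-sided p-value from the t distribution with n - 2 degrees of freedom
p_value <- 2 * pt(abs(t_stat), df = n - 2, lower.tail = FALSE)

c(t_stat = t_stat, p_value = p_value)
```

Doubling the upper-tail probability of `abs(t_stat)` works because the $t$ distribution is symmetric around 0.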

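Now let’s apply this process to test whether there is evidence of a linear relationship between per capita expenditure and the number of playgrounds per 10,000 residents. As before, the null and alternative hypotheses are

$$
H_0: \beta_1 = 0 \quad \text{vs.} \quad H_a: \beta_1 \neq 0
$$

where $\beta_1$ is the population slope describing the relationship between per_capita_expend and playgrounds. From Table 5.2, we know the observed slope $\hat{\beta}_1$ and its standard error $SE_{\hat{\beta}_1}$. The test statistic $T$ is the observed slope divided by its standard error.

This test statistic means that, assuming the true slope of per_capita_expend in this model is 0 and thus the mean of the distribution of $\hat{\beta}_1$ is 0, the observed slope is $|T|$ standard errors away from the hypothesized mean.

Given there are $n = 97$ observations, the test statistic follows a $t$ distribution with $97 - 2 = 95$ degrees of freedom. The p-value is the probability of observing a value at least as extreme as $T$ in this distribution.

Using a decision-making threshold of $\alpha = 0.05$, the p-value is less than the threshold, so we reject the null hypothesis. The data provide sufficient evidence that the population slope of per_capita_expend is not 0 in this model and that there is a statistically significant linear relationship between a city’s per capita expenditure and playgrounds per 10,000 residents in US cities.

Note that this conclusion is the same as in Section 5.6.4 using a simulation-based approach (even with small differences in the p-value). This is what we would expect, given these are two different approaches for conducting the same inferential process. We are also conducting the tests under the same assumption that the null hypothesis is true. The difference is in the methods available to quantify the variability in $\hat{\beta}_1$.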

5.8.4 Confidence interval

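As with simulation-based inference, a confidence interval calculated based on the results from the Central Limit Theorem is an estimated range of the values that $\beta_1$ can reasonably take.

The equation for a $C\%$ confidence interval for $\beta_1$ is

$$
\hat{\beta}_1 \pm t^* \times SE_{\hat{\beta}_1}
$$

where $t^*$ is the critical value obtained from the $t$ distribution with $n - 2$ degrees of freedom.

In Section 5.8.1, we discussed $SE_{\hat{\beta}_1}$, the standard error of the estimated slope.

The critical value is the point on the $t_{n-2}$ distribution such that the probability of being between $-t^*$ and $t^*$ is $C\%$.

Let’s calculate the 95% confidence interval for the slope of per_capita_expend. There are 97 observations, so we use the $t$ distribution with $97 - 2 = 95$ degrees of freedom to find the critical value, $t^* \approx 1.985$. The interval is then calculated from the estimated slope and standard error in Table 5.2.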

The interpretation is the same as before: We are 95% confident that the interval 0.0001 to 0.0065 contains the true slope for per_capita_expend. This means we are 95% confident that for each additional dollar increase in per capita expenditure, there are 0.0001 to 0.0065 more playgrounds per 10,000 residents, on average.
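As a check on the arithmetic, the interval can be computed directly as estimate ± critical value × standard error. This is a minimal sketch, assuming the rounded estimate and standard error from the tidy() output in Section 5.9.3; the object names are illustrative. Small differences from the software output are expected because of rounding in the inputs.

```r
# Rounded values for per_capita_expend taken from the tidy() output in Section 5.9.3
slope_est  <- 0.00330   # estimated slope
slope_se   <- 0.00160   # standard error of the slope
n_obs      <- 97        # number of observations in the sample
conf_level <- 0.95      # confidence level

# Critical value: the percentile of the t distribution (n - 2 degrees of freedom)
# that marks the upper bound of the middle 95%
crit_val <- qt(1 - (1 - conf_level) / 2, df = n_obs - 2)

# Confidence interval: estimate plus or minus the margin of error
ci <- slope_est + c(-1, 1) * crit_val * slope_se

round(ci, 4)
```

Changing conf_level to another value, such as 0.90, changes only the critical value; the estimate and standard error stay the same.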

5.9 Inference in R

5.9.1 Bootstrap confidence intervals in R

The bootstrap distribution and confidence interval are computed using the infer package (Couch et al. 2021). Because bootstrapping is a random sampling process, the code begins with set.seed() to ensure the results are reproducible. Any integer value can go inside the set.seed function.

1. Set a seed to make the results reproducible.

2. Define the number of bootstrap samples (iterations). Bootstrapping can be computationally intensive when using large data sets and a large number of iterations. We recommend using a small number of iterations (10 to 100) when testing code, then increasing the iterations once the code is finalized.

3. Specify the data set and save the bootstrap distribution in the object boot_dist.

4. Specify the response and predictor variable.

5. Specify the type of simulation (“bootstrap”) and the number of iterations.

6. For each bootstrap sample, fit the linear regression model.
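The annotated steps above correspond to an infer pipeline along the following lines. This is a sketch under stated assumptions, not necessarily the exact code used to produce the results in this chapter: the seed value and the name niter are illustrative, and the simulated stand-in data exist only so the example runs by itself.

```r
library(infer)

# Stand-in data so the sketch runs by itself (simulated, NOT the real parks data).
# This step is skipped when the chapter's `parks` data frame is already loaded.
if (!exists("parks")) {
  set.seed(1)
  parks <- data.frame(per_capita_expend = runif(97, min = 20, max = 400))
  parks$playgrounds <- 2.4 + 0.003 * parks$per_capita_expend + rnorm(97)
}

set.seed(12345)                                   # 1. seed (any integer works)
niter <- 100                                      # 2. small while testing; increase later

boot_dist <- parks |>                             # 3. data set; result saved in boot_dist
  specify(playgrounds ~ per_capita_expend) |>     # 4. response ~ predictor
  generate(reps = niter, type = "bootstrap") |>   # 5. simulation type and iterations
  fit()                                           # 6. fit the model to each bootstrap sample
```

The result has one row per term per replicate, with columns replicate, term, and estimate, which is why later code filters on term == "per_capita_expend".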

We can use ggplot to make a histogram of the bootstrap distribution.

Finally, we can compute the lower and upper bounds for the confidence interval using the quantile function. Note that the code includes ungroup() , so that the data are not grouped by replicate.

boot_dist |>

ungroup() |>

filter(term == "per_capita_expend") |>

summarise(lb = quantile(estimate, 0.025),

ub = quantile(estimate, 0.975))

# A tibble: 1 × 2

lb ub

<dbl> <dbl>

1 0.000735 0.00711

5.9.2 Permutation tests in R

The null distribution and p-value for the permutation test are computed using the infer package (Couch et al. 2021). Much of the code to generate the null distribution is similar to the code for the bootstrap distribution. Because permutation sampling is a random process, the code starts with set.seed() to ensure the results are reproducible.

1. Set a seed to make the results reproducible.

2. Define the number of permutation samples (iterations). Permutation sampling can be computationally intensive when using large data sets and a large number of iterations. We recommend using a small number of iterations (10 to 100) when testing code, then increasing the iterations once the code is finalized.

3. Specify the data set and save the null distribution in the object null_dist.

4. Specify the response and predictor variable.

5. Specify the null hypothesis of “independence”, corresponding to no linear relationship between the response and predictor variables.

6. Specify the type of simulation (“permute”) and the number of iterations.
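The annotated steps above can be sketched as the following infer pipeline. As with the bootstrap code, this is an illustration under stated assumptions: the seed value and the name niter are made up for the example, the simulated stand-in data are only there so the code runs by itself, and the final fit() step is inferred from the later use of term in the p-value code.

```r
library(infer)

# Stand-in data so the sketch runs by itself (simulated, NOT the real parks data).
# This step is skipped when the chapter's `parks` data frame is already loaded.
if (!exists("parks")) {
  set.seed(1)
  parks <- data.frame(per_capita_expend = runif(97, min = 20, max = 400))
  parks$playgrounds <- 2.4 + 0.003 * parks$per_capita_expend + rnorm(97)
}

set.seed(12345)                                   # 1. seed (any integer works)
niter <- 100                                      # 2. small while testing; increase later

null_dist <- parks |>                             # 3. data set; result saved in null_dist
  specify(playgrounds ~ per_capita_expend) |>     # 4. response ~ predictor
  hypothesize(null = "independence") |>           # 5. null: no linear relationship
  generate(reps = niter, type = "permute") |>     # 6. simulation type and iterations
  fit()                                           # fit the model to each permuted sample
```

The only structural differences from the bootstrap pipeline are the hypothesize() step and type = "permute", which shuffle the pairing between the response and the predictor so that each replicate reflects the null hypothesis.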

We can use ggplot to make a histogram of the null distribution.

Finally, we compute the p-value using the get_p_value() function.

# get estimated slope

estimated_slope <- parks |>

specify(playgrounds ~ per_capita_expend) |>

fit()

# compute p-value

get_p_value(null_dist, estimated_slope, direction = "both") |>

filter(term == "per_capita_expend")

# A tibble: 1 × 2

term p_value

<chr> <dbl>

1 per_capita_expend 0.054

5.9.3 Theory-based inference in R

The output from lm() contains the statistics discussed in this section to conduct theory-based inference. The p-value in the output corresponds to the two-sided alternative hypothesis that the slope is not equal to 0.

The confidence interval does not display by default, but can be added using the conf.int argument in the tidy function. The default confidence level is 95%; it can be adjusted using the conf.level argument in tidy().

parks_fit <- lm(playgrounds ~ per_capita_expend, data = parks)

tidy(parks_fit, conf.int = TRUE, conf.level = 0.95)

# A tibble: 2 × 7

term estimate std.error statistic p.value conf.low conf.high

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 2.42 0.214 11.3 3.11e-19 1.99 2.84

2 per_capita_expend 0.00330 0.00160 2.06 4.24e-2 0.000115 0.00648

In practice, we use the output from tidy() to get the confidence interval. To compute the confidence interval directly from the formula in Equation 5.11, we take the values in the estimate and std.error columns of the tidy() output. The critical value is computed using the qt function. The first argument is the cumulative probability (the percentile associated with the upper bound of the interval), and the degrees of freedom go in the second argument. For example, the critical value for the 95% confidence interval for the parks data used in Equation 5.12, computed with qt(0.975, 95), is

[1] 1.99

5.10 Summary

In this chapter we introduced two approaches for conducting statistical inference to draw conclusions about a population slope: simulation-based methods and theory-based methods. The standard error, test statistic, p-value, and confidence interval we calculated using the mathematical models from the Central Limit Theorem align with the output produced by statistical software in Table 5.2. Modern statistical software will produce these values for you, so in practice you will not typically derive these values “manually” as we did in this chapter. As the data scientist, your role will be to interpret the output and use it to draw conclusions. It’s still valuable, however, to understand where these values come from in order to interpret and apply them accurately. As more software has embedded artificial intelligence features, understanding how the values are computed also helps us check whether the software’s output makes sense given the data, analysis objective, and methods.

Which of these two methods is preferred to use in practice? In the next chapter, we will discuss the model assumptions from Section 5.3 and the conditions we use to evaluate whether the assumptions hold for our data. We will use these conditions in conjunction with other statistical and practical considerations to determine when we might prefer simulation-based methods or theory-based methods for inference.

Example: I think the relationship is positive. I predict that if the city spends more per resident, some of the funding is used for facilities like playgrounds.↩︎

Slope: For each additional dollar a city spends per resident, there are expected to be 0.003 more playgrounds per 10,000 residents, on average.

Intercept: We would not expect a city to invest $0 on services and facilities for its residents, so the interpretation of the intercept is not meaningful in practice.↩︎

We sample with replacement so that we get a new sample each time we bootstrap. If we sampled without replacement, we would always end up with a bootstrap sample that is exactly the same as the original sample.↩︎

Each bootstrap sample is the same size as our current sample data. In this case, the sample data we’re analyzing has 97 observations.↩︎

There are 1000 values, the number of iterations, in the bootstrapped sampling distribution.↩︎

The points at the

The variability is approximately equal in both distributions. This is expected, because the distributions will have the same variability but different centers.↩︎

Given there is no linear relationship between spending per resident and playgrounds per 10,000 residents (

It is possible we have made a Type I error, because we rejected the null hypothesis.↩︎

Standard error is the term used for the standard deviation of a sampling distribution.↩︎

Note its similarities to the general equation for sample standard deviation,

Test statistics with small magnitude provide evidence in support of the null hypothesis, as they are close to the hypothesized value. Conversely, test statistics with large magnitude provide evidence against the null hypothesis, as they are far away from the hypothesized value.↩︎